What Is Explainable AI (XAI)?

Explainable AI methods show how AI-powered decisions are reached

Previously, I explained why explanations are necessary for making your AI system human-friendly.

Think about it: you’re more likely to trust an employee and accept their absence if they give you a reason (“I’m not coming into work today” vs. “I’m not coming into work today because I had to rush to the hospital”).

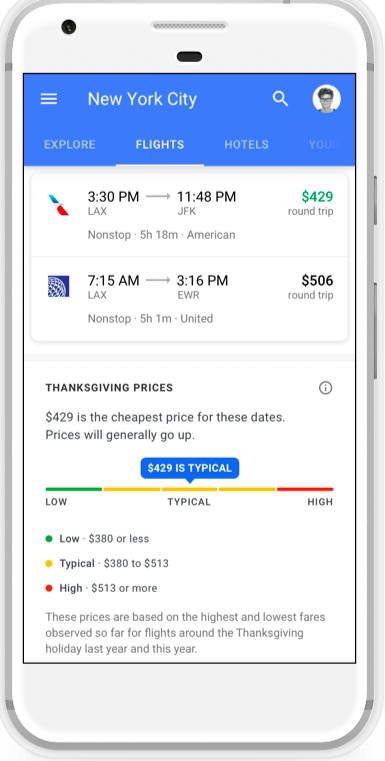

Google Flights price prediction

“$429 is the cheapest price for these dates. Prices will generally go up.”

I delayed booking a flight back in 2019 because I had noticed the price drop below its original level. I thought, “it might keep dropping,” but, lo and behold, the price went up.

Google Flights addresses this issue by predicting whether a price will go up or down in the future. You can read more about how it works here.

Analysis of Google Flights explanations

The image above shows how Google used explanations to make it easier for users to understand and trust the predictions.

- Average price range

This is shown both in the bar chart and in the text (“Low $380 or less…”).

When users ask “why did this happen?”, what they usually mean is “why did this happen instead of that?” So, if you’re rejected after a job interview, you may ask, “Why was I rejected?” What you really mean is, “Why was I rejected instead of accepted? What characteristics, experience, or education would have gotten me the job?” Lipton discusses this idea in his paper Contrastive Explanation.
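One way to make this “instead of” concrete is a counterfactual: search for the smallest change to the input that would have flipped the decision. The sketch below is a toy illustration only, assuming a made-up rule (`accept`) as a stand-in for a real hiring model; `Applicant`, `accept`, and `counterfactuals` are hypothetical names, and real counterfactual-explanation tools search much larger feature spaces.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Applicant:
    years_experience: int
    has_degree: bool


def accept(a: Applicant) -> bool:
    # Hypothetical toy rule standing in for a real hiring model.
    return a.years_experience >= 3 or (a.has_degree and a.years_experience >= 1)


def counterfactuals(a: Applicant, max_extra_years: int = 10) -> list[str]:
    """Return single-feature changes that would flip 'rejected' to 'accepted'."""
    if accept(a):
        return []  # Nothing to contrast: the applicant was accepted.
    changes = []
    # Would getting a degree alone have changed the outcome?
    if not a.has_degree and accept(replace(a, has_degree=True)):
        changes.append("having a degree")
    # Smallest number of extra years of experience that flips the outcome.
    for extra in range(1, max_extra_years + 1):
        if accept(replace(a, years_experience=a.years_experience + extra)):
            changes.append(f"{extra} more year(s) of experience")
            break
    return changes


rejected = Applicant(years_experience=2, has_degree=False)
print("Rejected instead of accepted. Any one of these would have changed it:",
      ", ".join(counterfactuals(rejected)))
```

The answer is phrased as the difference between the outcome the user got and the one they wanted, which is exactly the contrast the user is implicitly asking about.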

In this example, a user may wonder, “Cheap compared to what?” Cheap is relative, so Google answers by showing the low and high ends of the typical price range.
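A reference range like this can be generated alongside the prediction. This is a minimal sketch, assuming fares are labelled against the lower and upper quartiles of previously observed prices; Google does not document its thresholds here, so `price_explanation`, the quartile cut-offs, and the sample fares are all hypothetical.

```python
from statistics import quantiles


def price_explanation(current: float, observed: list[float]) -> str:
    """Describe a fare relative to previously observed fares (hypothetical thresholds)."""
    # Assumption: "low" and "high" are the lower and upper quartiles of observed fares.
    q1, _median, q3 = quantiles(observed, n=4)
    if current <= q1:
        label = "low"
    elif current >= q3:
        label = "high"
    else:
        label = "typical"
    # State the reference range so "low" or "high" has something to be compared against.
    return (f"${current:.0f} is {label} for these dates. "
            f"Low ${q1:.0f} or less, high ${q3:.0f} or more.")


fares = [350, 380, 400, 420, 450, 480, 520]  # made-up sample fares
print(price_explanation(375, fares))
```

The label alone (“low”) would leave the user asking “compared to what?”; appending the range answers that question in the same sentence.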

Here, I show how I used this principle to transform an AI output into a human-friendly one.

- Explain the data source

“These prices are based on the highest and lowest fares observed so far for flights around Thanksgiving holiday last year and this year.”

Revealing data sources is one way to improve your explanations. Google recommends this in its explainability guidelines.

Knowing where a prediction comes from helps users gauge how much to trust it, and it can prevent disappointment.

For example, suppose you ask users for their age, income, and location so you can recommend the best gyms near them. A user will be disappointed if the recommendation is a gym far away when they know there’s a closer one. Telling users that you use their location for predictions may prevent this disappointment, because they can then realize that their GPS is off.
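A minimal sketch of this idea, assuming a recommender that ranks gyms purely by straight-line (haversine) distance; `Gym`, `recommend_gym`, the coordinates, and the explanation wording are hypothetical. The design choice that matters is echoing back the location that was actually used, so a user with a wrong GPS fix can spot it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Gym:
    name: str
    lat: float
    lon: float


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    # Haversine great-circle distance on a sphere with Earth's mean radius.
    r = 6371.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(h))


def recommend_gym(user_lat: float, user_lon: float, gyms: list[Gym]) -> tuple[Gym, str]:
    # Pick the closest gym and explain which input (the location) drove the choice.
    best = min(gyms, key=lambda g: distance_km(user_lat, user_lon, g.lat, g.lon))
    d = distance_km(user_lat, user_lon, best.lat, best.lon)
    explanation = (f"Recommended because it is the closest gym ({d:.1f} km) "
                   f"to your location ({user_lat:.4f}, {user_lon:.4f}).")
    return best, explanation


gyms = [Gym("Downtown Fitness", 40.7128, -74.0060), Gym("Uptown Gym", 40.7831, -73.9712)]
best, why = recommend_gym(40.7130, -74.0050, gyms)
print(best.name, why)
```

If the printed coordinates are not where the user actually is, the explanation itself tells them why the recommendation looks wrong.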