Providing explanations for your AI algorithms' outputs can be useful. Explanations give users the ability to understand how a specific outcome was reached.

For example, your GPS system may recommend that you take route B, while you know that route A is shorter. This discrepancy is likely to make you doubt the route your GPS system suggests.

An explanation that shows you the variables behind the result (e.g., the route calculation and live traffic data) may be what allows you to trust your GPS outputs and the GPS provider’s brand.

In the paper “Rationalization: A Neural Machine Translation Approach to Generating Natural Language Explanations”, the authors showed that simply providing an explanation is not enough. Such explanations are often too technical, which makes them hard for lay users to understand.

In their study, the authors argued that AI rationalizations are needed.

AI rationalizations

AI rationalizations are methods that provide explanations as if a human, rather than a machine, had performed the action. In other words, they explain the data-driven process the way one human would explain it to another.

During their experiment, Ehsan, Harrison, Chan, and Riedl found that AI rationalizations made explanations easier for users to understand than simply showing the output of XAI methods.

Explaining something the way humans explain things to each other helps users form relevant mental models, which are necessary for human understanding and reasoning.

However, they also found that some participants felt the rationalization method “talked too much” (its explanations were delivered as natural-language text), and that for this reason they would have preferred a combination of conventional AI explanations and AI rationalizations.
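To make that combination concrete, here is a minimal Python sketch using the GPS example from earlier. It is not the authors' method: their paper generates rationalizations with a neural machine translation model, whereas this sketch uses a hand-written sentence template. The `Factor` class, the factor names, the weights, and the phrases are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    # Hypothetical input to a route choice (illustrative only)
    name: str      # machine-readable feature name
    weight: float  # contribution to the chosen route; positive favors it
    phrase: str    # hand-written human wording for this factor


def technical_explanation(factors: list[Factor]) -> list[str]:
    """XAI-style output: every factor, ranked by absolute contribution."""
    ranked = sorted(factors, key=lambda f: abs(f.weight), reverse=True)
    return [f"{f.name}: {f.weight:+.2f}" for f in ranked]


def rationalization(choice: str, factors: list[Factor]) -> str:
    """Human-style sentence built from the single most influential factor.

    A fixed template stands in for the NMT model used in the paper.
    """
    top = max(factors, key=lambda f: abs(f.weight))
    return f"I picked {choice} because {top.phrase}."


def combined_explanation(choice: str, factors: list[Factor]) -> str:
    """Short rationalization first, technical detail underneath."""
    lines = [rationalization(choice, factors)] + technical_explanation(factors)
    return "\n".join(lines)


if __name__ == "__main__":
    factors = [
        Factor("distance_km", -0.20, "route A is shorter"),
        Factor("live_traffic_delay_min", 0.90,
               "there is heavy traffic on route A right now"),
    ]
    print(combined_explanation("route B", factors))
```

Running it prints the one-sentence rationale "I picked route B because there is heavy traffic on route A right now." followed by the ranked factors `live_traffic_delay_min: +0.90` and `distance_km: -0.20`. Leading with the short rationale and keeping the raw factors underneath is one possible way to address the “talked too much” feedback: users get a human-style answer first and can read the technical detail only if they want it.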

The downside of AI rationalizations

Although these human-like explanations are beneficial, they need to be used carefully.

What I mean is that, while taking a human approach to technology is beneficial, you still need to be careful not to confuse the user.

Human-centered artificial intelligence is about asking “How can this technology better serve human needs?” rather than “How can this technology appear human?”

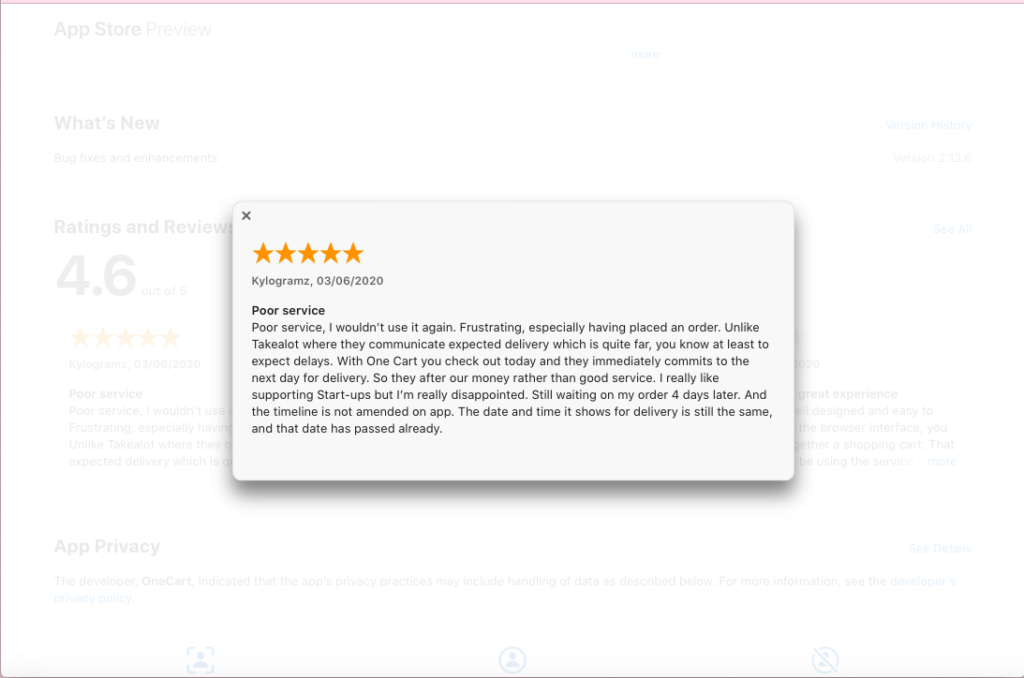

In the AI + People document, Google illustrates this by showing how a chatbot that is indistinguishable from a human can confuse users into thinking they are talking to a person rather than a bot. Users then place unrealistic expectations on the system, which can lead to dissatisfied customers.

Conclusion

Using explanations to create human-centered technology can improve customer satisfaction.

However, it is important to distinguish between human-centered technology and technology that merely appears human. The latter can lead to customer disappointment.

You can find an example of how I created explanations for the feature importance XAI method here.

References

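- Ehsan, Harrison, Chan, and Riedl, “Rationalization: A Neural Machine Translation Approach to Generating Natural Language Explanations”

- Google, AI + People document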